Azure Container Apps (ACA) are great to work with when everything behaves. So much so that it’s becoming my go-to for solution design when enabling microservices in Azure (replacing potentially complex AKS scenarios).

The ingress abstraction is particularly nice. Deploy your container spec, flip on ingress, and Azure quietly handles the ugly parts: TLS certificates, routing, scaling, health probes. It’s one of the big selling points of the service: PaaS simplicity, container flexibility.

But like any abstraction, the moment you need to do something outside the happy path, you quickly realise how little you can tweak under the hood. Most of the time, that’s fine. Sometimes, it’s not.

When Abstraction Gets In The Way

As with any abstraction layer, most of what it hides from you is a blessing. Azure Container Apps (ACA) does a great job of removing the complexity of ingress, TLS, scaling, and routing. But every now and then, you hit an edge case where that same abstraction introduces nuance – and occasionally, disadvantages.

Here’s one such case:

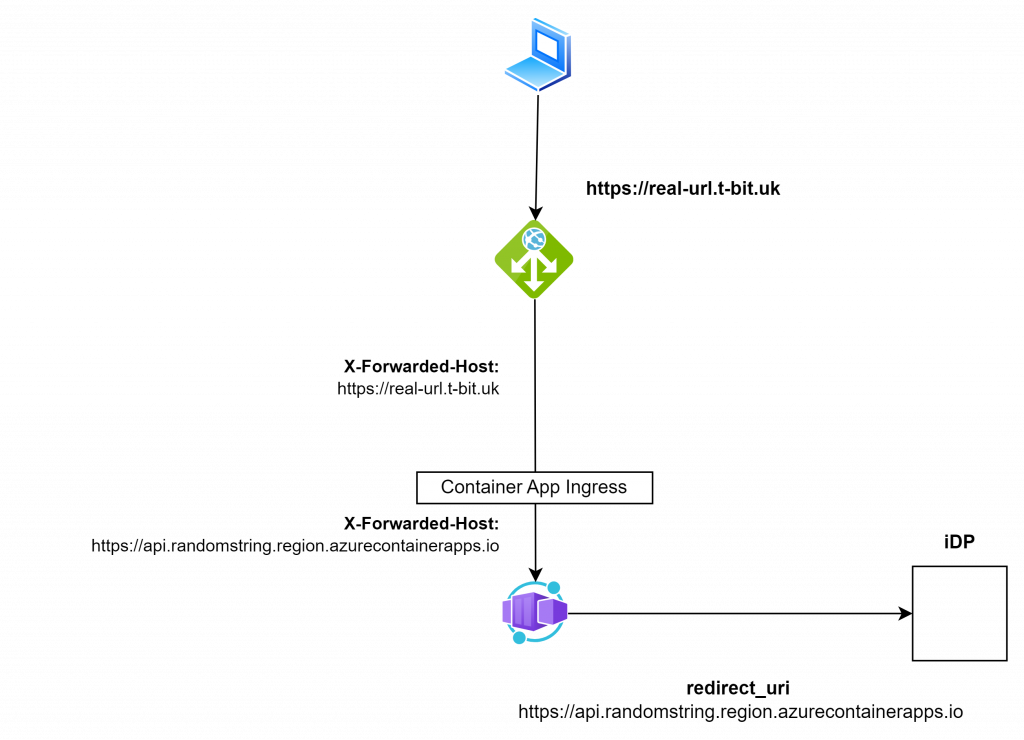

- Your Container App is running privately, with no public ingress enabled.

- You are not using Custom Domains.

- You’re fronting it with an Azure Application Gateway (with or without WAF) for public access.

- The application misbehaves when it can’t explicitly set or force its own service URI – for example, during OAuth authentication flows to an identity provider, where redirect URIs must match exactly.

In other words, the app doesn’t quite know who it is when seen through multiple layers of reverse proxying. The built-in ACA ingress controller won’t let you alter its deeper settings to fix this behaviour.

You obviously have the option to fix the application code, and that is typically the first path to try. But I’ve now observed several public open-source components (that make up a wider solution) misbehave in this way. Yes, you could fork and fix – or raise an issue and potentially fix it yourself – but cadence is everything with Azure enablement, and that’s often not viable.

Why The Misbehaviour?

Most modern web frameworks determine their own “external identity” from request headers like Host, X-Forwarded-Host, and X-Forwarded-Proto. In a double reverse proxy setup (e.g., Application Gateway → ACA ingress → your app), those headers may no longer match the public hostname or protocol that clients actually used.

Without a custom domain, ACA’s ingress overwrites forwarding headers coming from Application Gateway. This can break scenarios where the app must know its original public hostname and protocol — particularly in identity flows like OAuth.

You could fix this in application code — and that’s usually the first path to try. But in practice, I’ve seen multiple public open-source components misbehave in this way. Forking and fixing is possible, but cadence matters in Azure delivery projects, and sometimes that’s simply not viable.

The Workaround: Deploy a Sidecar Ingress Controller

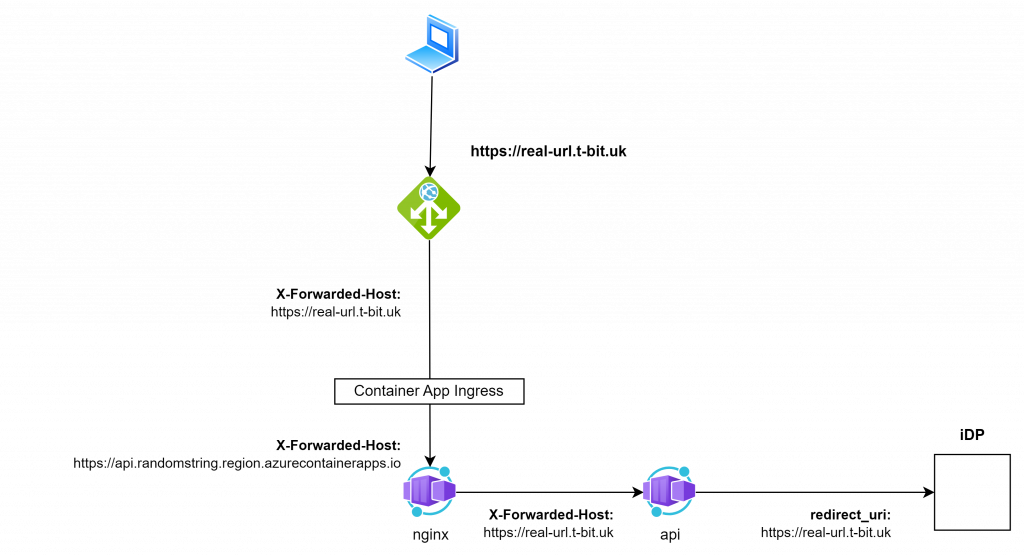

If you can’t change ACA’s ingress behaviour – bypass it. Insert your own ingress layer within the Container App, one that exposes the dials that require tuning.

- Deploy an nginx sidecar container alongside your application in the same ACA revision.

- Configure ACA ingress to send traffic to nginx, not your app.

- nginx reverse-proxies traffic to your app container, fixing headers and hostnames along the way.

You now control the ingress that your app sees. You can force X-Forwarded-* headers, set the Host to whatever the app expects, and generally make the application believe it’s being called directly at its canonical FQDN.

Keeping It Stateless

ACA workloads are designed to be stateless, so your sidecar shouldn’t break that principle. Instead of baking nginx configuration into your container image, mount it at runtime. For example:

- Store the nginx config in a native Container App secret.

- Mount the secret into the container at startup.

This keeps configuration out of the image and allows you to update routing or headers without redeploying the entire app.
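As a minimal sketch of that idea, the public hostname could be supplied as a Terraform variable and interpolated into the nginx config held in the secret, so changing it means changing a variable rather than an image. The variable name `public_hostname` and the local `nginx_conf` are assumptions for illustration, not part of the original setup; the `secret` block itself belongs inside the `azurerm_container_app` resource.

```hcl
# Hypothetical variable: the hostname clients use at the Application Gateway.
variable "public_hostname" {
  type        = string
  description = "Public FQDN the app should believe it is served from"
}

locals {
  # nginx config rendered by Terraform. Only ${var.public_hostname} is
  # interpolated; the rest of the text is passed through unchanged.
  nginx_conf = <<-EOT
    events {}
    http {
      server {
        listen 80;
        location / {
          proxy_pass http://localhost:8080;
          proxy_set_header Host ${var.public_hostname};
          proxy_set_header X-Forwarded-Host ${var.public_hostname};
          proxy_set_header X-Forwarded-Proto https;
        }
      }
    }
  EOT
}

# Inside resource "azurerm_container_app" "api" { ... }:
#   secret {
#     name  = "app"
#     value = local.nginx_conf
#   }
```

nginx only reads its configuration at startup or reload, so an updated secret will likely need a revision restart before it takes effect.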

This is a clean workaround, but not totally free of complexity:

- The sidecar scales exactly like your app — same scaling rules, replicas, and limits.

- For HTTP workloads, consider scaling by HTTP concurrency rather than CPU/memory to avoid bottlenecks.

- You now have two moving parts per app: the nginx container and the application container. Both need monitoring and resource tuning.
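The HTTP concurrency point above can be sketched with the `http_scale_rule` block of the `azurerm_container_app` template. The rule name, the replica ceiling, and the threshold of 50 are illustrative assumptions rather than tuned values, and this is a fragment rather than a complete resource.

```hcl
# Goes inside resource "azurerm_container_app" "api" { ... }
template {
  min_replicas = 1
  max_replicas = 5 # illustrative ceiling, not from the original example

  # Scale on concurrent HTTP requests per replica instead of CPU/memory.
  http_scale_rule {
    name                = "http-concurrency" # hypothetical rule name
    concurrent_requests = "50"               # illustrative threshold
  }

  # container { ... } blocks for the app and the nginx sidecar go here
}
```

Because the sidecar and the app share a replica, one rule like this scales both containers together.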

Example

Here’s a simplified example assuming you already have:

- A resource group

- A virtual network and necessary routing

- An Application Gateway (with WAF, listener, and backend config)

- A Container App Environment

Our app (API) listens on TCP port 8080.

Within our single Container App:

    resource "azurerm_container_app" "api" {
      name                         = lower("misbehaving-app")
      container_app_environment_id = module.cae.id
      resource_group_name          = azurerm_resource_group.cae.name
      revision_mode                = "Single"
      workload_profile_name        = "Consumption"

      # The secret, template and ingress blocks shown in the following
      # snippets are nested here.
    }

We define our nginx configuration through a Secret. This is where we set the correct headers:

    secret {
      name  = "app"
      value = <<EOT
    events {}
    error_log /dev/stderr warn;
    http {
      access_log /dev/stdout;
      server {
        listen 80;
        location / {
          # Forward to the app container listening on port 8080
          proxy_pass http://localhost:8080;
          # Present the public hostname and scheme to the app
          proxy_set_header Host real-url.t-bit.uk;
          proxy_set_header X-Forwarded-Host real-url.t-bit.uk;
          proxy_set_header X-Forwarded-Proto https;
          proxy_http_version 1.1;
        }
      }
    }
    EOT
    }

Within our Template, we specify both the misbehaving app (with the settings that SHOULD resolve the issue but don’t) and our nginx sidecar.

We specify nginx to start up by pointing it toward our custom configuration:

    template {
      max_replicas = 1
      min_replicas = 1

      container {
        name   = "api"
        image  = "misbehaving-app:latest"
        cpu    = 0.5
        memory = "1Gi"

        env {
          name  = "port"
          value = "8080"
        }

        # The app's own setting that should fix the issue, but doesn't
        env {
          name  = "service_uri_that_doesnt_work"
          value = "real-url.t-bit.uk"
        }
      }

      container {
        name   = "nginx"
        image  = "docker.io/nginx:1.28.0"
        cpu    = 0.25
        memory = "0.5Gi"

        # Run nginx in the foreground with the config file from the secret volume
        args = [
          "nginx",
          "-g",
          "daemon off;",
          "-c",
          "/cae/nginx/app"
        ]

        # Each secret appears as a file named after the secret inside the
        # mount path, so the secret "app" lands at /cae/nginx/app
        volume_mounts {
          name = "cae"
          path = "/cae/nginx"
        }
      }

      volume {
        name         = "cae"
        storage_type = "Secret"
      }
    }

We then specify the Ingress of the Container App to direct traffic toward the nginx sidecar:

    ingress {
      # Port 80 is the nginx sidecar, not the app on 8080
      target_port                = 80
      external_enabled           = true
      transport                  = "auto"
      allow_insecure_connections = true

      traffic_weight {
        latest_revision = true
        percentage      = 100
      }
    }

We now observe the correct flow.

Conclusion

Azure Container Apps provide an excellent abstraction for running container workloads, removing the need to manage ingress controllers, TLS, or scaling logic yourself. In most cases, the built-in ingress is more than enough. But in identity-sensitive scenarios (OAuth, SAML, or custom API integrations) you may need finer control over headers and hostname behaviour than ACA’s managed ingress allows.

A sidecar ingress controller such as nginx gives you that control without abandoning ACA for AKS. It’s a great way to preserve the PaaS benefits while taming those tricky applications that don’t play nicely with double reverse proxies.

More broadly, abstraction in cloud services is almost always a net positive: it accelerates delivery, reduces operational overhead, and removes a whole class of problems. But there will always be edge cases where that same abstraction becomes a constraint. In those cases, it’s worth exploring whether parts of the abstraction can be used creatively to solve the problem – while carefully weighing the complexity this introduces. There’s a tipping point where a workaround stops making sense and a change in architecture becomes the better option.

The key is recognising where that line sits for your application, your team, and your wider platform strategy – and making that decision deliberately as part of the solution architecture.